Apple's Vision Pro isn't a product for most of us or even me... Yet.

But it will revolutionize personal computing as we know it.

I let the dust settle this week before writing about Apple’s Vision Pro, the company’s first and highly anticipated foray into AR/XR technology. Dozens of technology writers far more notable than I am had the opportunity to use the device and its 3D visionOS operating system at its WWDC 2023 launch at Apple HQ in Cupertino. I felt that a hot-take reaction would not only get buried in the overall coverage but would also be superfluous.

I needed time to analyze this and consider what it meant to me, you, and the industry.

I wasn’t at the Vision Pro WWDC event, so I haven’t had the opportunity to look at the product close-up. Like many of you, I watched the stream and read everyone else’s coverage. Initial reactions in the technology community have ranged from calling it a mind-blowingly disruptive and transformative product that will change personal computing forever to outright visceral dismissal due to its $3499 launch price and the product’s limitations, which we will get to in a bit.

About the price

Yeah, that price. It’s not a mass-market consumer product at launch, and not one that I think the mainstream will bite on for some time. Vision Pro will not be available until sometime in 2024, so there will be a lot of armchair analysis and discussion of whether Apple will reconsider that price point. It’s certainly too expensive for me to even think about buying the first generation because I am not a software developer with a keen interest in porting apps to the new visionOS platform and monetizing them. I also don’t think I’ll be writing enough about it and its software to justify an exploratory purchase.

But will it change the technology industry? No question. Let’s start with the fact that the real product launched is not the Vision Pro headset but an entirely new computer class – spatial computing – that runs on an operating system that will likely power it for the next ten years and more.

The technology and industry implications

We only have to look at the history and success of iOS over the last 16 years as an example. It launched in 2007 and has begotten not just many iterations of the iPhone but derivatives running on the iPad, Apple TV, and Apple Watch, and the technology ended up being used in every Apple product. We’ve seen that cross-pollination reach even the current-generation macOS Ventura and, even more so, the macOS Sonoma that was also announced at WWDC. I think we can expect the same from visionOS over time.

visionOS has a 3D, human-centric immersive interface that runs in both an augmented mode (overlaid on your field of vision) and a fully virtual, immersive mode. It is compatible with a large library of existing iOS/iPadOS apps and can also interface with Mac apps in remote desktop mode. It runs Unity 3D apps as well. Separately, Apple announced a Game Porting Toolkit at WWDC, built on Wine open source technology, to help developers bring Windows PC games to the Mac; that tooling targets macOS rather than visionOS.

There have certainly been other, less expensive XR/VR/AR headsets targeted at consumers, such as Oculus/Meta Quest and PlayStation VR, and vertical market products at similar and even higher price points, such as Microsoft HoloLens and Magic Leap (which has received billions of dollars in investment from NTT, AT&T, Google, and others). But none has offered nearly as much functionality and capability at launch as this product, and by comparison they all now look outdated. So I think anyone making a product priced anywhere close to this for vertical market use just got put on notice.

The vertical market application ecosystem will be necessary for gaining initial market share for Vision. While much of what Apple showed for the “wow” factor was gaming and entertainment, I do not doubt that the first adopters will be industries such as medical imaging and visualization, aerospace and automotive, engineering, architecture, and related fields. To these customers, Vision’s $3499 price tag and its use-case limitations are not an obstacle to buying thousands or even tens of thousands of units each. I expect Apple to sell at least a million units worldwide in the first year, primarily to this customer base.

The social implications

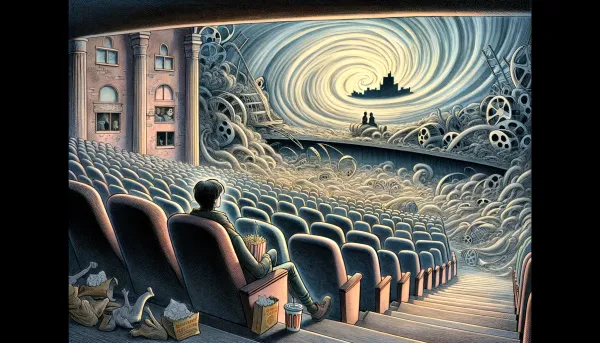

Let’s talk about those limitations a bit. At over 1 pound with the optional top head strap, it’s not something you want to wear for hours unless you are sitting down, let alone all day. You also cannot use it all day unless it is connected to AC power, because the tethered external battery pack it requires has a maximum run time of about two hours. That is shorter than many full-length films, let alone Avatar: The Way of Water, which I watched this weekend and which runs 3 hours and 12 minutes. So that use case depicted with the wearer on an aircraft watching movies for hours at a time? There had better be AC or higher-wattage USB PD ports at her seat.

So I don’t think there is any real threat of a Vision Pro equivalent of “glassholes” beyond the occasional elitist jerk bringing one into a Starbucks or an airport lounge for a few hours. Nobody in their right mind is going to walk around in public with one unless it’s for a YouTube or TikTok stunt, which I am sure we will see initially.

What it means for work

There was no discussion of whether Vision Pro has 5G connectivity, so I assume this first version has no built-in cellular radio at all and would need to rely on a tethered iPhone or a Mac for internet connectivity unless it is directly connected to a high-speed Wi-Fi network. That leaves the device in offices and homes with good broadband – 1Gbps or better. There’s no doubt in my mind that the fully immersive maps with 3D models will need excellent connectivity to make the most of the hardware, unless all the data is stored locally on the device instead of being loaded on the fly.

We also don’t know the extent of the actual computing power of the device. Yes, it has an M2 chip (not the M2 Pro or M2 Max) and a specialized R1 spatial processor to offload sensor input processing. However, the M2 comes in different flavors, and we don’t yet know precisely which one Vision Pro uses. The base-level M2 used in the MacBook Air has 8GB of RAM, 8 CPU cores split into four performance and four efficiency cores, and either 8 or 10 GPU cores. There are also 16GB and 24GB RAM versions. Onboard SSD storage on equivalent Mac desktop and laptop systems ranges from 256GB to 2TB.

But given the two-hour battery life, my guess is that it is configured closer to something like an entry-level MacBook Air, with either 8GB or 16GB of RAM. Any more than that would probably be overkill, given the types of iOS and iPad apps that are meant to run natively on it. Mac apps, such as Adobe’s suite or Final Cut, run on a virtual “display,” essentially a windowed remote desktop connection over Wi-Fi to a more powerful Mac running the application software.

But even with these limitations, I see a lot of interest in this product going forward as software developers fully exploit what this thing can do and as economies of scale bring the cost closer to $2000 or $1500 per headset. This may take three or even five years, but there’s no question that’s going to happen.

How it will impact standards for charging and data transfer

One of the immediate implications of this is charging standards. The Vision Pro uses a round magnetic connector to attach to its external battery or charging cable. Presumably, that charging cable uses USB Power Delivery (PD) in the area of 35W or higher to charge the unit’s much smaller internal standby battery so you can hot-swap power connections without interruption.

So not only will we see that round magnetic charge connector used on other Apple products, possibly the next-generation iPhone 15 Pro and Pro Max or the iPhone 16, but a combination of it and high-speed wireless data transfer will almost certainly replace the Lightning connection. Lightning is now long in the tooth as it is limited to 18W and USB 2.0 data speeds; it’s why Apple ditched it on the iPad Pro.

If we see Apple switch to USB-C/USB PD on the iPhone 15, we will need many more USB PD charging outlets to accommodate that, along with Vision Pro and other AR headset products, and of course, all the Android devices and other consumer electronics that now charge over USB. If I were a hotel or even a consumer, I would start thinking about retrofitting AC-only outlets with ones that have high-output USB PD ports. I also think we will need ways to get power to our furniture, such as living room couches, if we really intend to use headsets like Vision Pro for hours at a time. The Ankers and the Belkins of the world will need accessory solutions for that soon.

What it means for content consumption and the metaverse

I also think it’s unrealistic to expect a family of four or more to own several of these devices, even at $1500 or $1000 each, at least for the next ten years. But conceivably, a Vision could replace an iPad and a Mac, and indeed a 4K TV set, for a single person in a small apartment. It potentially changes the value of what “space” means: if you can experience a personal, 100-foot screen in a small, enclosed room, there’s no need for a $3000 85-inch Samsung or LG anymore unless you have multiple people who want to watch a movie at the same time.

Of course, there’s a comfort tradeoff in wearing a headset that weighs over a pound to experience content with a VR product like Vision instead of just chilling on your couch and looking at the big screen on the wall. Still, I expect that advances in materials will make successive models increasingly lighter. I postulated what an AR/VR-centric lifestyle in 2034 might be like in a speculative article I wrote for ZDNET back in 2013. The technology I predicted, which is implant-based, is very far off, but if you apply some of these ideas to the Vision Pro and its successors, some of this stuff starts to make sense.

One notable omission from Apple’s Vision Pro launch is any discussion of the “Metaverse”, or immersive, collaborative VR “worlds”. This is clearly where Apple differs from what Meta views as our AR/VR future. Apple sees the Vision as a product that runs apps and is used as a workplace tool or for content consumption, whereas Meta sees the Metaverse itself as a world that we live in and where we interact with others using VR. Conceivably, Meta and others could port their “Worlds” to visionOS so that they become content providers whose worlds are not tied to their own hardware. Indeed, Disney’s involvement in the product launch for Vision Pro means we will see Marvel and Star Wars “worlds” that are more along the lines of theme park style entertainment and multiplayer games rather than the kind of full-time immersion that Meta wants us to experience.

By building its own App Store specifically for Vision, Apple can take its usual cut of every “world” that requires micropayments to transact, which is certainly an interesting strategy. However, the EU has now set rules that will potentially require Apple to permit “sideloads” of competing applications and app stores, which we are likely to see first introduced with the release of iOS 17.

These are just my earliest thoughts on Vision, and I am sure I’ll have other things to say about it as we get closer to an actual product release. I’m excited about the new platform's prospects, even though I am not ready to own one just yet.